Northwestern

University, Pennsylvania State University, Loyola University

|

|

Ultra-scalable system software and tools for data-intensive computing Alok Choudhary1,

Gokhan Memik1, Seda Memik1, Mahmut T. Kandemir2,

George Thiruvathukal3 1 Department of EECS,

Northwestern University 2 Department of CSE, Penn

State 3 Department of CS, Loyola

University |

Abstract

This project entails research and development and to

address the software and tools problems for ultra-scale parallel machines,

especially targeted for scalable I/O, storage and memory hierarchy. The

fundamental premise is that to achieve extreme scalability, incremental changes

or adaptation of traditional (extension of sequential) interfaces and

techniques for scaling data accesses and I/O will not succeed, because they are

based on pessimistic and conservative assumptions of parallelism,

synchronization, and data sharing patterns. We will develop innovative

techniques to optimize data access that utilize the understanding of high-level

access patterns ("intent"), and use that information through runtime

layers to enable optimizations and reduction / elimination of locking and

synchronization at different levels. The proposed mechanisms will allow

different software layers to interact/cooperate with each other. Specifically,

the upper layers in the software stack extract high-level access pattern

information and pass it to the lower layers in the stack, which in turn exploit

them to achieve ultra-scalability. In particular, the main objectives of this

project are: (1) Techniques, tools and software for extracting data access

patterns and data-flow at runtime; (2) Interfaces and strategies for passing

access pattern across the different layers for optimizations; (3)

Implementation of these techniques in appropriate layers such as parallel file

system, communication software 9e.g., MPI2), and runtime libraries to reduce or

eliminate synchronization and locking; (4) Runtime techniques and tools that

exploit access patterns for reducing power consumption and cooling requirements

for the underlying storage system; and (5) Development of interfaces and

software to use active storage for data analysis and filtering.

Problem

Description:

As high performance computing (HPC) system sizes and

capabilities approach extreme-scale ranges, scales of problems and applications

that were unimaginable a few years ago are within reach. Such problems by definition

are extremely data intensive, especially given that byte/FLOP ratio of most

science and engineering applications is expected to either remain constant or

increase. Data-intensive applications come from all types of domains including

astrophysics, climate and operational weather modeling, nanoscience, materials,

combustion, cosmology, bioinformatics and drug design, nuclear simulations,

sensor data processing, security, and others. However, the problem of

performance, productivity, and portability (P3) must be addressed in a holistic

manner considering the entire science and engineering application design and

execution workflow in order to reach the potential benefits of extreme-scale

systems. Data that is produced, consumed, handled, visualized, analyzed and

mined is reaching hundreds of terabytes to petabytes range (raw data, derived

images, movies, streams, metadata, mined data, etc.) for most application

domains. Thus, along with computational capabilities, ultra-scale software to

scale I/O, storage, memory hierarchy performance must be developed in order to

address data intensive nature of applications and reap benefits in performance

and productivity of extreme-scale systems. From the P3

perspective, all components and data flow among those components must scale in

order to achieve extreme scalability. For data-intensive applications, the I/O,

storage layers, and memory hierarchy are extremely critical, and are often

overlooked, but they tend to become a bottleneck in not only obtaining scalable

performance but also in achieving high productivity.

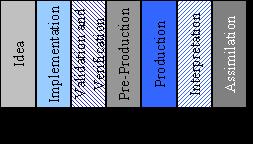

A fundamental goal of an application scientist/engineer is to design, analyze and understand experimental, observational and computational data. As system sizes and capabilities approach peta-scale range, scales of problems that were unimaginable a few years ago are within reach. However, the problem of performance, productivity, and portability (P3) must be addressed in a holistic manner in order to reach the potential benefits of ultrascale systems. A typical large-scale application entails many stages of computation and analysis with several feedback loops. Figure 1 presents various illustrative stages including, Idea/Problem Formulation Stage, Implementation Stage, Validation/Verification Stage (intermixed with the previous stage), Production Stage, Interpretation/Analysis Stage, and Assimilation Stage. There is a constant feedback loop for refinement and improvement within an application domain. As the data grows to peta-scale, these steps become prohibitively slow. Therefore, in order to improve P3, this entire process must be considered in developing techniques, software and tools for ultrascale system.

Figure 1. A simplified example of stages of an

application in science and engineering for large-scale systems. In reality,

there are multiple fragmentations, intermixing and feedback cycles between the

stages.

Methodology:

A typical large-scale data-intensive application will use several layers of software. For example, an operational weather modeling application may use NetCDF for data representation and storage, which in turn may be implemented in parallel using MPI-IO for portability, which may be layered on top of Parallel Virtual File System (PVFS) on a cluster. The highest layer normally has semantic information associated with it, which tends to include the intent of a user. For example, at the NetCDF layer, the user may have represented a three-dimensional structure with a specific distribution of data (each element of which may be a collection of variables such as temperature, pressure, density, humidity, etc.). These relationships and semantic information are lost in the lower layer (e.g., MPI-IO), where even though multidimensional arrays may be represented, high-level view of data gets lost. Further down, even this information gets lost, and data is a stream of bytes. However, since this layer interacts with the architecture, network and storage system, the interfaces and optimizations have to account for worst-case scenarios for conflicts, synchronization, locking, coherence checks and other issues, which adversely affect the performance significantly. In other words, in this conventional design, entire software stack and layers are designed pessimistically, rather than optimistically. The task of optimizations in such complex systems is left to the user, who must specify how to perform the scalable parallel operations for data access.

A user needs to consider a variety of properties of an application including type of data access, access patterns, parallelism, type of I/O and storage system, software layer and interfaces and others. Furthermore, even if the user was able to figure out the right combination for a particular configuration, changing configuration (processors, network, architecture features, software) results in optimizations being no longer applicable, thereby adversely affecting performance, productivity, and portability (P3). In order to significantly improve and scale the P3, we must design and develop interfaces, software, runtime systems that let user specify what the intent is and what the requirement of a task is rather than how to accomplish it. The techniques, optimizations and their implementations then should understand the intent and determine the right strategy at runtime. In this project, we investigate such innovative techniques, specifically oriented towards the I/O subsystem and deep memory hierarchies. The aim is to use high-level semantics in optimization with light-weight low-level software (e.g., file system or communication within memories) to scale performance by reducing or eliminating synchronization and locking by guaranteeing certain events and access patterns to the layers below. The same access pattern information will also be used for other functions including power-management of storage system, use of non-volatile memory within user space to significantly reduce latency and improve bandwidth and for data movement across distributed memories.

Publications:

·

Exploiting inter-file access patterns using

multi-collective I/O

Gokhan Memik, Mahmut T. Kandemir, Alok Choudhary

In Proc. of USENIX Conference on File and Storage Technologies (FAST),

Monterey / CA, January, 2002.

Sponsored by:

|

|

NSF, Software and Tools for High-End Computing (ST-HEC) Program, Contract No. 0444405, 0444158, 0444197 |